This week we discussed the tricky topic of Open Access in academia. As a young scholar, I can see the challenges on both sides of this "issue." On the one hand, I can see how, as a precarious young scholar, making my work widely available could lead to others easily … Continue reading Open Access

Tag: digital humanities

Digital Humanities Pedagogy

Of all the possible topics within the Digital Humanities, pedagogy is by far my favorite! I love exploring not only ways to incorporate Digital Humanities-based skills and tools into my teaching, but also ways of teaching the field of DH itself. I've had the opportunity to observe several styles of teaching Digital Humanities over the … Continue reading Digital Humanities Pedagogy

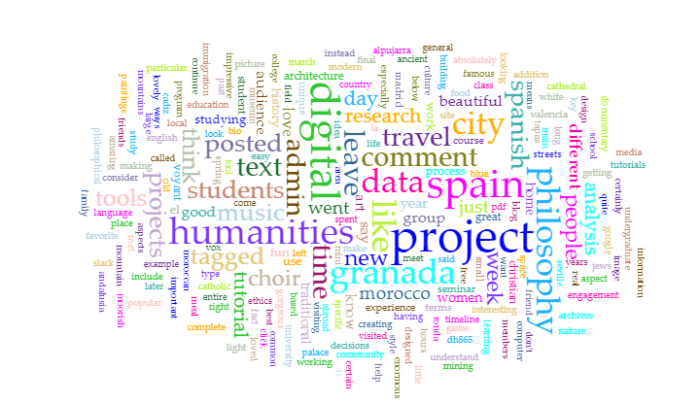

Text Mining

A Word Cloud of all the words I have used in all of my blogs. I will shout from the heavens that I love voyant-tools! Before Voyant I didn't have much of a concept or appreciation for text mining. I was first introduced to Voyant by Laura McGrath when I was a TA for the … Continue reading Text Mining

Visualization and Networks

This week focused on visualization tools with an emphasis on relationships or networks within data. I decided to pursue my more law-focused passions and see what such tools could show about the current state of immigration within the United States. After some quick searching I came across the United States government's Department of Homeland Security … Continue reading Visualization and Networks

Spatial and Temporal Visualizations

One of my favorite types of projects in Digital Humanities is mapping. Mapping can tell stories, argue, and reveal different perspectives. The readings from this week talked about several tools and platforms I have used before, but I was surprised by the sheer number of other tools of which I had not heard! The volume … Continue reading Spatial and Temporal Visualizations

Audience Engagement

This week in the DH865 course we were delayed by a true Polar Vortex, hence a delay in this post. However, the focus of the week, audience engagement, is one that can never be delayed when creating a digital project (in my opinion). I argue that it should drive the research project, and be one … Continue reading Audience Engagement

Data in Humanities

Archives, Data, and Humanities: A Philosopher's Reflections

This week our Digital Humanities seminar served as a good reminder of the possibilities and breadth of data potential in humanities fields. Miriam Posner’s blog “Humanities Data: A Necessary Contradiction” was not only an excellent introduction to the notion of all objects bearing metadata, but also a further … Continue reading Data in Humanities

Tools and Reviewing Digital Projects in Philosophy

Digital Tools and Reviewing Digital Projects in Philosophy

This week in DH865 we discussed various approaches to digital project review within our areas of study. Additionally, we were asked to find digital tools that might contribute to and foster digital work in our focus areas. Admittedly, I have a pessimistic view of philosophy's relationship to … Continue reading Tools and Reviewing Digital Projects in Philosophy

Documentaries Tutorial

One of the biggest undertakings in the digital humanities is a documentary project. These are time-consuming and require a plethora of skills and organization. As you may have noticed from my own documentary that I made as a sophomore, I am anything but a professional. But, having gone through the process, I am aware of the … Continue reading Documentaries Tutorial

Text & Data Analysis Part 3: RAWGraphs Tutorial

Some of the first ventures into the field now known as the Digital Humanities began when humanists wanted to use computers to read and analyze large bodies of text. Starting with Father Busa’s computer-readable project of Aquinas, today some of the most popular DH projects are text and data analysis-based. This tutorial highlights how to … Continue reading Text & Data Analysis Part 3: RAWGraphs Tutorial